Subjective Questions (With Answers)

UNIT-I

Introduction to Computer Networks

Data Communication: When we communicate, we are sharing information.

This sharing can be local or remote. Between individuals, local communication

usually occurs face to face, while remote communication takes place over

distance.

Components:

A data communications system has five components:

1. Message. The message is

the information (data) to be communicated. Popular forms of information include

text, numbers, pictures, audio, and video.

2. Sender. The sender is the

device that sends the data message. It can be a computer, workstation,

telephone handset, video camera, and so on.

3. Receiver. The receiver is

the device that receives the message. It can be a computer, workstation,

telephone handset, television, and so on.

4. Transmission medium. The

transmission medium is the physical path by which a message travels from sender

to receiver. Some examples of transmission media include twisted-pair wire,

coaxial cable, fiber-optic cable, and radio waves.

5. Protocol. A protocol is a

set of rules that govern data communications. It represents an agreement

between the communicating devices. Without a protocol, two devices may be

connected but not communicating, just as a person speaking French cannot be

understood by a person who speaks only Japanese.

Data Representation:

Information today comes in

different forms such as text, numbers, images, audio, and video.

Text:

In data communications, text is represented as a bit pattern, a sequence of bits (0s or 1s). Different sets of bit patterns have been designed to represent text symbols. Each set is called a code, and the process of representing symbols is called coding. Today, the prevalent coding system is Unicode, which can use up to 32 bits to represent a symbol or character used in any language in the world. The American Standard Code for Information Interchange (ASCII), developed some decades ago in the United States, now constitutes the first 128 characters in Unicode and is also referred to as Basic Latin.
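As a minimal sketch of the idea of text as a bit pattern, the Python snippet below prints the bits for one character under ASCII and under a fixed 32-bit Unicode encoding (UTF-32). The helper name `to_bits` is hypothetical and chosen only for this illustration.

```python
def to_bits(data: bytes) -> str:
    """Return the bytes as a string of 0s and 1s, 8 bits per byte."""
    return "".join(format(byte, "08b") for byte in data)


# The same character under two codes (illustrative choice of character).
ascii_bits = to_bits("A".encode("ascii"))       # 1 byte  -> 8 bits
utf32_bits = to_bits("A".encode("utf-32-be"))   # 4 bytes -> 32 bits, big-endian, no byte-order mark

print(ascii_bits)   # 01000001
print(utf32_bits)   # 00000000000000000000000001000001
```

The 32-bit pattern ends with the same 8 bits as the ASCII pattern, which is what it means for ASCII to occupy the first positions of Unicode.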

Numbers:

Numbers

are also represented by bit patterns. However, a code such as ASCII is not used

to represent

numbers; the number is directly converted to a binary number to simplify

mathematical operations. Appendix B discusses several different numbering

systems.

Images:

Images

are also represented by bit patterns. In its simplest form, an image is

composed of a matrix of pixels (picture elements), where each pixel is a small

dot. The size of the pixel depends on the resolution. For example, an

image can be divided into 1000 pixels or 10,000 pixels. In the second case,

there is a better representation of the image (better resolution), but more

memory is needed to store the image. After an image is divided into pixels,

each pixel is assigned a bit pattern. The size and the value of the pattern

depend on the image. For an image made of only black-and-white dots (e.g., a

chessboard), a 1-bit pattern is enough to represent a pixel. If an image is not

made of pure white and pure black pixels, you can increase the size of the bit

pattern to include gray scale. For example, to show four levels of gray scale,

you can use 2-bit patterns. A black pixel can be represented by 00, a dark gray

pixel by 01, a light gray pixel by 10, and a white pixel by 11. There are

several methods to represent color images. One method is called RGB, so called

because each color is made of a combination of three primary colors: red, green,

and blue. The intensity of each color is measured, and a bit pattern is

assigned to it. Another method is called YCM, in which a color is made of a

combination of three other primary colors: yellow, cyan, and magenta.
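The trade-off between resolution, bit depth, and memory can be sketched in a few lines of Python. This is only an illustration: the function names `image_size_bits` and `pack_pixels` are hypothetical, and the gray-level codes are the four 2-bit patterns given in the text above.

```python
from typing import List

# 2-bit gray-scale code taken from the text: 00 black ... 11 white.
GRAY_LEVELS = {"black": 0b00, "dark gray": 0b01, "light gray": 0b10, "white": 0b11}


def image_size_bits(num_pixels: int, bits_per_pixel: int) -> int:
    """Memory needed for an uncompressed image, in bits."""
    return num_pixels * bits_per_pixel


def pack_pixels(pixels: List[str]) -> str:
    """Encode a row of gray-scale pixel names as one bit string (2 bits each)."""
    return "".join(format(GRAY_LEVELS[name], "02b") for name in pixels)


print(image_size_bits(1000, 1))      # 1000  (black-and-white, 1000 pixels)
print(image_size_bits(10_000, 2))    # 20000 (four gray levels, 10,000 pixels)
print(pack_pixels(["black", "white", "dark gray", "light gray"]))  # 00110110
```

Going from 1000 one-bit pixels to 10,000 two-bit pixels improves the representation but multiplies the storage by twenty.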

Audio:

Audio refers to the

recording or broadcasting of sound or music. Audio is by nature different from

text, numbers, or images. It is continuous, not discrete. Even when we use a

microphone to change voice or music to an electric signal, we create a

continuous signal.

Video:

Video refers to the

recording or broadcasting of a picture or movie. Video can either be produced

as a continuous entity (e.g., by a TV camera), or it can be a combination of

images, each a discrete entity, arranged to convey the idea of motion. Again we

can change video to a digital or an analog signal.

Data Flow:

Simplex:

In simplex mode, the

communication is unidirectional, as on a one-way street. Only one of the two

devices on a link can transmit; the other can only receive (see Figure a).

Keyboards and traditional monitors are examples of simplex devices. The

keyboard can only introduce input; the monitor can only accept output. The

simplex mode can use the entire capacity of the channel to send data in one

direction.

Half-Duplex:

In half-duplex mode, each

station can both transmit and receive, but not at the same time. When one

device is sending, the other can only receive, and vice versa. The half-duplex

mode is like a one-lane road with traffic allowed in both directions.

When cars are traveling in

one direction, cars going the other way must wait. In a half-duplex transmission,

the entire capacity of a channel is taken over by whichever of the two devices

is transmitting at the time. Walkie-talkies and CB (citizens band) radios are

both half-duplex systems.

The half-duplex mode is used in cases where there is no need for

communication in both directions at the same time; the entire capacity of the

channel can be utilized for each direction.

Full-Duplex:

In full-duplex mode, both stations

can transmit and receive simultaneously (see Figure c). The full-duplex mode is

like a two-way street with traffic flowing in both directions at the same

time. In full-duplex mode, signals going in one direction share the capacity of

the link with signals going in the other direction. This sharing can occur in

two ways: either the link must contain two physically separate transmission

paths, one for sending and the other for receiving; or the capacity of the

channel is divided between signals traveling in both directions. One

common example of full-duplex communication is the telephone network. When two

people are communicating by a telephone line, both can talk and listen at the

same time. The full-duplex mode is used when communication in both directions

is required all the time. The capacity of the channel, however, must be divided

between the two directions.

Network Criteria:

A network must be able to meet a certain number of criteria. The most

important of these are performance, reliability, and security.

Performance:

Performance can be measured

in many ways, including transit time and response time. Transit time is the

amount of time required for a message to travel from one device to another.

Response time is the elapsed time between an inquiry and a response. The

performance of a network depends on a number of factors, including the number

of users, the type of transmission medium, the capabilities of the connected

hardware, and the efficiency of the software. Performance is often evaluated by

two networking metrics: throughput and delay. We often need more throughput

and less delay. However, these two criteria are often contradictory. If we try

to send more data to the network, we may increase throughput but we increase

the delay because of traffic congestion in the network.

Reliability:

In addition to accuracy of

delivery, network reliability is measured by the frequency of failure, the time

it takes a link to recover from a failure, and the network's robustness in a

catastrophe.

Security:

Network security issues

include protecting data from unauthorized access, protecting data from damage

and development, and implementing policies and procedures for recovery from

breaches and data losses.

PROTOCOLS AND STANDARDS:

Protocols:

In computer networks,

communication occurs between entities in different systems. An entity is

anything capable of sending or receiving information. However, two entities

cannot simply send bit streams to each other and expect to be understood. For

communication to occur, the entities must agree on a protocol. A protocol is a

set of rules that govern data communications. A protocol defines what is

communicated, how it is communicated, and when it is communicated. The key

elements of a protocol are syntax, semantics, and timing.

• Syntax: The term syntax refers to the structure or format of the data, meaning the order in which they are presented. For example, a simple protocol might expect the first 8 bits of data to be the address of the sender, the second 8 bits to be the address of the receiver, and the rest of the stream to be the message itself.

• Semantics: The word semantics refers to the meaning of each section of bits. How is a particular pattern to be interpreted, and what action is to be taken based on that interpretation? For example, does an address identify the route to be taken or the final destination of the message?

• Timing: The term timing refers to two characteristics: when data should be sent and how fast they can be sent. For example, if a sender produces data at 100 Mbps but the receiver can process data at only 1 Mbps, the transmission will overload the receiver and some data will be lost.
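The syntax example above (first 8 bits sender address, second 8 bits receiver address, remainder message) can be sketched in Python as follows. This is a toy protocol for illustration only, not a real standard; the names `Frame`, `build_frame`, and `parse_frame` are hypothetical.

```python
from typing import NamedTuple


class Frame(NamedTuple):
    sender: int     # first 8 bits
    receiver: int   # second 8 bits
    message: bytes  # rest of the stream


def build_frame(sender: int, receiver: int, message: bytes) -> bytes:
    """Lay the fields out in the agreed order; addresses must fit in 8 bits."""
    return bytes([sender, receiver]) + message


def parse_frame(raw: bytes) -> Frame:
    """Split a received byte stream back into its fields."""
    if len(raw) < 2:
        raise ValueError("frame too short: need at least two address bytes")
    return Frame(sender=raw[0], receiver=raw[1], message=raw[2:])


raw = build_frame(0x0A, 0x0B, b"hello")
frame = parse_frame(raw)
print(frame.sender, frame.receiver, frame.message)  # 10 11 b'hello'
```

Both sides must agree on this layout in advance. A receiver that expected the receiver address first would read the same bits and reach a different, wrong interpretation.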

Question No. 1

Define computer networks. Discuss the various types of network topologies in computer networks, along with the advantages and disadvantages of each topology.

Answer:-

A computer network is a collection of autonomous computers interconnected by a single technology. Two computers are said

to be interconnected if they are able to exchange information. The old model of

a single computer serving all of the organization's computational needs has

been replaced by one in which a large number of separate but interconnected

computers do the job. These systems are called computer networks.

Network topologies:

Network topology is defined as the logical connection of the various computers in the network.

The six basic network topologies

are: bus, ring, star, tree, mesh and hybrid.

1. Bus Topology:

In bus topology, all the computers are connected to a long cable called a bus. A node that wants to send data puts the data on the bus, which carries it to the destination node. In this topology, any computer can send data over the bus at any time. Since the bus is shared among all the computers, an arbitration mechanism is needed to prevent simultaneous access to the bus when two or more computers want to send data at the same time.

A bus topology is easy to install but is not flexible, i.e., it is difficult to add a new node to the bus. In addition, the whole bus stops functioning even if only a portion of it breaks down. It is also very difficult to isolate faults.

2. Ring Topology:

In ring topology, the computers are connected in the form of a ring. Each node has exactly two adjacent neighbors. To reach a distant node on the ring, data passes through many intermediate nodes before arriving at its ultimate destination.

A ring topology is easy to install and reconfigure. In this topology, fault isolation is easy because a signal that circulates all the time in the ring helps in identifying a faulty node. Data transmission takes place in only one direction. When a node fails, it breaks down the whole ring. To overcome this drawback, some ring topologies use dual rings. The topology is not suitable for connecting a large number of computers.

3. Star Topology:

In star topology, all the nodes are connected to a central node called a hub. A node that wants to send data to some other node on the network sends the data to the hub, which in turn sends it to the destination node. The hub plays a major role in such networks.

Star topology is easy to install and reconfigure. If a link fails, only the node connected to that link is separated from the network, and the rest of the network continues to function. However, if the hub goes down, the entire network collapses.

4. Tree Topology:

Tree topology is a hierarchy of various hubs. All the nodes are connected to one hub or another. There is a central hub to

which only a few nodes are connected directly. The central hub, also called

active hub, looks at the incoming bits and regenerates them so that they can

traverse over longer distances. The secondary hubs in tree topology may be

active hubs or passive hubs. The failure of a transmission line separates a

node from the network.

5. Mesh Topology:

A mesh topology is also called a complete topology. In this topology, each node is connected directly to every other node in the network. That is, if there are n nodes, there are n(n - 1)/2 physical links in the network.

As there are dedicated links, the topology does

not have congestion problems. Further it does not need a special Media Access

Control (MAC) protocol to prevent simultaneous access to the transmission media

since links are dedicated, not shared. The topology also provides data

security. The network can continue to function even in the failure of one of

the links. Fault identification is also easy. The main disadvantage of mesh

topology is the complexity of the network and the cost associated with the

cable length. The mesh topology is not useful for medium to large networks.
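To see why the cabling cost of a mesh grows so quickly, the link counts can be compared with a short Python sketch. The mesh formula n(n - 1)/2 comes from the text above; the star count is an added assumption (one link from each of the n nodes to the hub, with the hub not counted among the n nodes). The function names are hypothetical.

```python
def mesh_links(n: int) -> int:
    """Physical links in a full mesh of n nodes: n(n - 1)/2."""
    return n * (n - 1) // 2


def star_links(n: int) -> int:
    """Assumed count for a star: one link per node to the central hub."""
    return n


for n in (5, 10, 50):
    print(n, mesh_links(n), star_links(n))
# 5 10 5
# 10 45 10
# 50 1225 50
```

The mesh count grows roughly with the square of the number of nodes, which is the reason a full mesh becomes impractical beyond small networks.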

6. Hybrid Topology:

Hybrid topology is formed by connecting two or more topologies together. For example, a hybrid topology can be created by combining the bus, star, and ring topologies.

Question No. 2

What are the applications of Computer Networks?

Answer:-

1. Information:

One of the applications of computer

networks is the ability to provide access to remote information.

• Pay bills; carry out transactions

on bank accounts etc.

• Shop from home by inspecting the

catalogs of thousands of companies available online.

• Ask the newspaper for full information about topics that interest you, such as corrupt politicians, big fires, football, and so on.

• Access information about health,

science, art, business, cooking, sports, travel, and government and so on. All

this is available on the information systems like the World Wide Web (WWW).

2. Communication:

A popular application of computer networks is electronic mail, or e-mail, which is widely used by millions of people to send and receive text messages. With real-time e-mail, remote users can communicate and even see and hear each other at the same time. It is also possible to hold virtual meetings, called videoconferences, online among remote users.

3. Entertainment:

A huge and growing application is entertainment. It entertains people by offering video on demand, multiple real-time games, and so on.

Question No. 3

Explain the history of the Internet.

Answer: -

The Internet has

revolutionized many aspects of our daily lives. It has affected the way we do

business as well as the way we spend our leisure time. Count the ways you've

used the Internet recently. Perhaps you've sent electronic mail (e-mail) to a

business associate, paid a utility bill, read a newspaper from a distant city,

or looked up a local movie schedule, all by using the Internet. Or maybe you

researched a medical topic, booked a hotel reservation, chatted with a fellow

Trekkie, or comparison-shopped for a car. The Internet is a communication

system that has brought a wealth of information to our fingertips and organized

it for our use.

A Brief History:

A network is a group of

connected communicating devices such as computers and printers. An internet

(note the lowercase letter i) is two or more networks that can communicate with

each other. The most notable internet is called the Internet (uppercase letter

I), a collaboration of more than hundreds of thousands of interconnected

networks. Private individuals as well as various organizations such as

government agencies, schools, research facilities, corporations, and libraries

in more than 100 countries use the Internet. Millions of people are users. Yet

this extraordinary communication system only came into being in 1969.

In the mid-1960s, mainframe

computers in research organizations were standalone devices. Computers from

different manufacturers were unable to communicate with one another. The

Advanced Research Projects Agency (ARPA) in the Department of Defense (DoD) was

interested in finding a way to connect computers so that the researchers they

funded could share their findings, thereby reducing costs and eliminating

duplication of effort.

The Internet Today:

The Internet has

come a long way since the 1960s. The Internet today is not a simple

hierarchical structure. It is made up of many wide- and local-area networks

joined by connecting devices and switching stations. It is difficult to give an

accurate representation of the Internet because it is continually changing: new

networks are being added, existing networks are adding addresses, and networks

of defunct companies are being removed. Today most end users who want Internet

connection use the services of Internet service providers (ISPs). There are

international service providers, national service providers, regional service

providers, and local service providers. The Internet today is run by private

companies, not the government. Figure 1.13 shows a conceptual (not geographic) view of the Internet.

International Internet Service Providers:

At the top of the hierarchy

are the international service providers that connect nations together.

National Internet Service Providers:

The national Internet

service providers are backbone networks created and maintained by specialized

companies. There are many national ISPs operating in North America; some of the

most well known are SprintLink, PSINet, UUNet Technology, AGIS, and internet MCI.

To provide connectivity between the end users, these backbone networks are

connected by complex switching stations (normally run by a third party) called

network access points (NAPs). Some national ISP networks are also connected to

one another by private switching stations called peering points. These

normally operate at a high data rate (up to 600 Mbps).

Regional Internet Service Providers:

Regional internet service

providers or regional ISPs are smaller ISPs that are connected to one or more

national ISPs. They are at the third level of the hierarchy with a smaller data

rate.

Local Internet Service Providers:

Local Internet service providers provide direct

service to the end users. The local ISPs can be connected to regional ISPs or

directly to national ISPs. Most end users are connected to the local ISPs. Note

that in this sense, a local ISP can be a company that just provides Internet

services, a corporation with a network that supplies services to its own

employees, or a nonprofit organization, such as a college or a university, that

runs its own network. Each of these local ISPs can be connected to a regional

or national service provider.

Question No. 4

What is the OSI model? Explain the functions, protocols, and services of each layer.

Answer: -

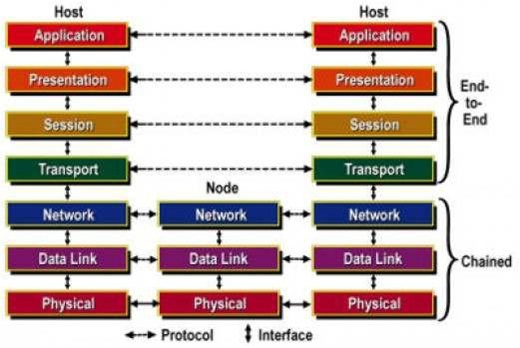

The OSI Reference Model:

The OSI model (minus the physical medium) is shown in the figure. This model is based on a proposal developed by the International Organization for Standardization (ISO) as a first step toward international standardization of the protocols used in the various layers (Day and Zimmermann, 1983). It was revised in 1995 (Day, 1995). The model is called the ISO-OSI (Open Systems Interconnection) Reference Model because it deals with connecting open systems, that is, systems that are open for communication with other systems. The OSI model has seven layers. The principles that were applied to arrive at the seven layers can be briefly summarized as follows:

1. A layer should be created where

a different abstraction is needed.

2. Each layer should perform a

well-defined function.

3. The function of each layer

should be chosen with an eye toward defining internationally standardized

protocols.

4. The layer boundaries should be

chosen to minimize the information flow across the interfaces.

5. The number of layers should be large enough

that distinct functions need not be thrown together in the same layer out of

necessity and small enough that the architecture does not become unwieldy.

THE OSI REFERENCE MODEL

1. The Physical Layer:

The physical layer is concerned

with transmitting raw bits over a communication channel. The design issues have to do with making

sure that when one side sends a 1 bit, it is received by the other side as a 1

bit, not as a 0 bit.

2. The Data Link Layer:

The main task of the data link

layer is to transform a raw transmission facility into a line that

appears free of undetected

transmission errors to the network layer. It accomplishes this task by having

the sender break up the input data into data frames (typically a few hundred or

a few thousand bytes) and transmit the frames sequentially. If the service is

reliable, the receiver confirms correct receipt of each frame by sending back

an acknowledgement frame. Another issue that arises in the data link layer (and

most of the higher layers as well) is how to keep a fast transmitter from

drowning a slow receiver in data. Some traffic regulation mechanism is often

needed to let the transmitter know how much buffer space the receiver has at

the moment. Frequently, this flow regulation and the error handling are

integrated.

3. The Network Layer:

The network layer controls the operation

of the subnet. A key design issue is determining how

packets

are routed from source to destination. Routes can be based on static tables

that are ''wired into'' the network and rarely changed. They can also be

determined at the start of each conversation, for example, a terminal session

(e.g., a login to a remote machine). Finally, they can be highly dynamic, being

determined anew for each packet, to reflect the current network load. If too

many packets are present in the subnet at the same time, they will get in one

another's way, forming bottlenecks. The control of such congestion also belongs

to the network layer. More generally, the quality of service provided (delay,

transit time, jitter, etc.) is also a network layer issue. When a packet has to

travel from one network to another to get to its destination,

many problems can arise. The

addressing used by the second network may be different from the first one. The

second one may not accept the packet at all because it is too large. The

protocols may differ, and so on. It is up to the network layer to overcome all

these problems to allow heterogeneous networks to be interconnected. In

broadcast networks, the routing problem is simple, so the network layer is

often thin or even nonexistent.

4. The Transport Layer:

The basic function of the transport

layer is to accept data from above, split it up into smaller units if need be, pass these to the

network layer, and ensure that the pieces all arrive correctly at the other

end. Furthermore, all this must be done efficiently and in a way that isolates

the upper layers from the inevitable changes in the hardware technology. The

transport layer also determines what type of service to provide to the session

layer, and, ultimately, to the users of the network. The most popular type of

transport connection is an error-free point-to-point channel that delivers

messages or bytes in the order in which they were sent. However, other possible

kinds of transport service are the transporting of isolated messages, with no

guarantee about the order of delivery, and the broadcasting of messages to

multiple destinations. The type of service is determined when the connection is

established. The transport layer is a true end-to-end layer, all the way from

the source to the destination. In other words, a program on the source machine

carries on a conversation with a similar program on the destination machine,

using the message headers and control messages. In the lower layers, the

protocols are between each machine and its immediate neighbors, and not between

the ultimate source and destination machines, which may be separated by many

routers.

5. The Session Layer:

The session layer allows users on

different machines to establish sessions between them. Sessions offer various services,

including dialog control (keeping track of whose turn it is to transmit), token

management (preventing two parties from attempting the same critical operation

at the same time), and synchronization (checkpointing long transmissions to

allow them to continue from where they were after a crash).

6. The Presentation Layer:

The presentation layer is concerned

with the syntax and semantics of the information transmitted. In order to make it possible for

computers with different data representations to communicate, the data

structures to be exchanged can be defined in an abstract way, along with a

standard encoding to be used ''on the wire.'' The presentation layer manages

these abstract data structures and allows higher-level data structures (e.g.,

banking records), to be defined and exchanged.
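A minimal sketch of a standard "on the wire" encoding, using Python's standard `struct` module: the record layout below (a 4-byte account id followed by an 8-byte balance in cents) is a hypothetical example invented for this illustration, not a format defined by OSI. The `!` prefix selects network (big-endian) byte order, so the bytes are the same regardless of how either machine represents integers internally.

```python
import struct

# Hypothetical banking record: unsigned 32-bit account id, signed 64-bit balance in cents.
# "!" = network byte order (big-endian), standard sizes, no padding.
RECORD_FORMAT = "!Iq"


def encode_record(account_id: int, balance_cents: int) -> bytes:
    """Convert the local representation into the agreed wire encoding."""
    return struct.pack(RECORD_FORMAT, account_id, balance_cents)


def decode_record(wire: bytes) -> tuple:
    """Convert wire bytes back into local Python integers."""
    return struct.unpack(RECORD_FORMAT, wire)


wire = encode_record(1234, -5000)
print(len(wire))             # 12
print(decode_record(wire))   # (1234, -5000)
```

The sender and receiver only need to agree on `RECORD_FORMAT`; neither needs to know the other's native byte order or word size.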

7. The Application Layer:

The application layer contains a

variety of protocols that are commonly needed by users. One widely-used application protocol is HTTP

(Hypertext Transfer Protocol), which is the basis for the World Wide Web. When

a browser wants a Web page, it sends the name of the page it wants to the

server using HTTP. The server then sends the page back. Other application

protocols are used for file transfer, electronic mail, and network news.

Question No. 5

Explain the following:- a) LAN

b) MAN

c) WAN

d) ARPANET

Answer: -

a) LAN-Local Area Networks (LAN)

Local area networks, generally

called LANs, are privately-owned networks within a single building or campus of

up to a few kilometers in size. They are widely used to connect personal

computers and workstations in company offices and factories to share resources

(e.g., printers) and exchange information. LANs are distinguished from other

kinds of networks by three characteristics:


(1) Their size,

(2) Their transmission technology,

and

(3) Their topology.

LANs are restricted in size, which

means that the worst-case transmission time is bounded and known in advance.

Knowing this bound makes it possible to use certain kinds of designs that would

not otherwise be possible. It also simplifies network management. LANs may use

a transmission technology consisting of a cable to which all the machines are

attached, like the telephone company party lines once used in rural areas.

Traditional LANs run at speeds of 10 Mbps to 100 Mbps, have low delay (microseconds or nanoseconds), and make very few errors. Newer LANs operate at up to 10 Gbps.

Various topologies are possible for broadcast LANs. The figure shows two of them.

In a bus (i.e., a linear cable) network, at any instant at most one machine is

the master and is allowed to transmit. All other machines are required to

refrain from sending. An arbitration mechanism is needed to resolve conflicts

when two or more machines want to transmit simultaneously. The arbitration

mechanism may be centralized or distributed. IEEE 802.3, popularly called

Ethernet, for example, is a bus-based broadcast network with decentralized

control, usually operating at 10 Mbps to 10 Gbps. Computers on an Ethernet can

transmit whenever they want to; if two or more packets collide, each computer

just waits a random time and tries again later.
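The "wait a random time and try again" rule can be sketched in Python. The text above does not say how the random time is chosen; the sketch assumes the binary exponential backoff scheme commonly described for classic Ethernet, where the waiting range doubles after each successive collision (capped here at 2^10 slots). The function name `backoff_slots` is hypothetical.

```python
import random


def backoff_slots(attempt: int, rng: random.Random) -> int:
    """Number of slot times to wait after the given collision (1-based).

    Assumption: binary exponential backoff, i.e. a uniform pick from
    0 .. 2**k - 1 where k = min(attempt, 10).
    """
    if attempt < 1:
        raise ValueError("attempt must be at least 1")
    exponent = min(attempt, 10)
    return rng.randrange(2 ** exponent)


rng = random.Random(42)  # fixed seed only so the demo is repeatable
for attempt in (1, 2, 3):
    upper = 2 ** min(attempt, 10) - 1
    print(f"collision {attempt}: wait {backoff_slots(attempt, rng)} slots (range 0..{upper})")
```

Because each station draws its own random number, two stations that just collided are unlikely to pick the same delay again, and the widening range reduces the chance further on repeated collisions.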

A second type of broadcast system is the

ring. In a ring, each bit propagates around on its own, not waiting for the

rest of the packet to which it belongs. Typically, each bit circumnavigates the

entire ring in the time it takes to transmit a few bits, often before the

complete packet has even been transmitted. As with all other broadcast systems,

some rule is needed for arbitrating simultaneous accesses to the ring. Various

methods, such as having the machines take turns, are in use. IEEE 802.5 (the

IBM token ring), is a ring-based LAN operating at 4 and 16 Mbps. FDDI is

another example of a ring network.

b) Metropolitan Area Network:

A metropolitan area network, or MAN, covers a city. The best-known example of a MAN is the cable television network available in many cities. This system grew from earlier community antenna systems used in areas with poor over-the-air television reception. In these early systems, a large antenna was placed on top of a nearby hill and the signal was then piped to the subscribers' houses.

At first, these were

locally-designed, ad hoc systems. Then companies began jumping into the

business, getting contracts from city governments to wire up an entire city.

The next step was television programming and even entire channels designed for

cable only. Often these channels were highly specialized, such as all news, all

sports, all cooking, all gardening, and so on. But from their inception until

the late 1990s, they were intended for television reception only.

To a first approximation, a MAN might

look something like the system shown in Fig. 2. In this figure, both television

signals and Internet are fed into the centralized head end for subsequent

distribution to people's homes.

Cable television is not the only MAN. Recent developments in high-speed wireless Internet access resulted in another MAN, which has been standardized as IEEE 802.16. A MAN can also be implemented by a standard called DQDB (Distributed Queue Dual Bus), or IEEE 802.6. DQDB has two unidirectional buses (or cables) to which all the computers are attached.

c) Wide Area Network:

A wide area network, or WAN, spans

a large geographical area, often a country or continent. It contains a collection of machines

intended for running user (i.e., application) programs. These machines are

called hosts. The hosts are connected by a communication subnet, or just

subnet for short. The hosts are owned by the customers (e.g., people's personal

computers), whereas the communication subnet is typically owned and operated by

a telephone company or Internet service provider. The job of the subnet is to

carry messages from host to host, just as the telephone system carries words

from speaker to listener. Separation of the pure communication aspects of the

network (the subnet) from the application aspects (the hosts), greatly

simplifies the complete network design.

In most wide area networks, the

subnet consists of two distinct components: transmission lines and switching

elements. Transmission lines move bits between machines. They can be made of

copper wire, optical fiber, or even radio links. In most WANs, the network

contains numerous transmission lines, each one connecting a pair of routers. If

two routers that do not share a transmission line wish to communicate, they

must do this indirectly, via other routers. When a packet is sent from one

router to another via one or more intermediate routers, the packet is received

at each intermediate router in its entirety, stored there until the required

output line is free, and then forwarded. A subnet organized according to this

principle is called a store-and-forward or packet-switched subnet. Nearly all

wide area networks (except those using satellites) have store-and-forward

subnets. When the packets are small and all the same size, they are often

called cells.

The principle of a packet-switched WAN is so important that it is worth describing in more detail. Generally, when a process

on some host has a message to be sent to a process on some other host, the

sending host first cuts the message into packets, each one bearing its number

in the sequence. These packets are then injected into the network one at a time

in quick succession. The packets are transported individually over the network

and deposited at the receiving host, where they are reassembled into the

original message and delivered to the receiving process. A stream of packets

resulting from some initial message is illustrated. In this figure, all the

packets follow the route ACE, rather than ABDE or ACDE. In some

networks all packets from a given message must follow the same route; in others

each packet is routed separately. Of course, if ACE is the best route, all

packets may be sent along it, even if each packet is individually routed.
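The cut, number, and reassemble steps described above can be sketched as follows. This is a minimal illustration, not a real network stack: the function names, the 8-byte payload size, and the use of `random.shuffle` to imitate out-of-order arrival are all assumptions made for the example.

```python
import random


def packetize(message: bytes, payload_size: int):
    """Cut a message into (sequence_number, payload) packets."""
    return [
        (seq, message[start:start + payload_size])
        for seq, start in enumerate(range(0, len(message), payload_size))
    ]


def reassemble(packets):
    """Rebuild the original message from packets in any arrival order."""
    ordered = sorted(packets, key=lambda packet: packet[0])
    return b"".join(payload for _, payload in ordered)


message = b"A message cut into packets by the sending host."
packets = packetize(message, payload_size=8)

# Individually routed packets may arrive in a different order than sent.
random.shuffle(packets)

restored = reassemble(packets)
print(restored == message)
```

Because every packet carries its sequence number, the receiving host can restore the original order no matter which route each packet took.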

Not all WANs are packet switched. A

second possibility for a WAN is a satellite system. Each router has an antenna

through which it can send and receive. All routers can hear the output from the

satellite, and in some cases they can also hear the upward transmissions of

their fellow routers to the satellite as well. Sometimes the routers are

connected to a substantial point-to-point subnet, with only some of them having

a satellite antenna. Satellite networks are inherently broadcast and are most

useful when the broadcast property is important.

d) ARPANET:

The subnet would consist of

minicomputers called IMPs (Interface Message Processors) connected by 56-kbps transmission

lines. For high reliability, each IMP would be connected to at least two other

IMPs. The subnet was to be a datagram subnet, so if some lines and IMPs were

destroyed, messages could be automatically rerouted along alternative paths.

Each node of the network was to consist

of an IMP and a host, in the same room, connected by a short wire. A host could

send messages of up to 8063 bits to its IMP, which would then break these up

into packets of at most 1008 bits and forward them independently toward the

destination. Each packet was received in its entirety before being forwarded,

so the subnet was the first electronic store-and-forward packet-switching

network.
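As a quick worked calculation of the limits quoted above, the sketch below counts how many packets a maximum-length message needs. It assumes the 1008-bit limit applies to the message bits carried in each packet and ignores any header overhead, which the text does not describe.

```python
MAX_MESSAGE_BITS = 8063  # largest message a host could hand to its IMP
MAX_PACKET_BITS = 1008   # largest packet the IMP would forward

# Ceiling division using integers only.
packets_needed = -(-MAX_MESSAGE_BITS // MAX_PACKET_BITS)

# Bits left over for the final packet after the full-size ones are filled.
last_packet_bits = MAX_MESSAGE_BITS - (packets_needed - 1) * MAX_PACKET_BITS

print(packets_needed, last_packet_bits)
```

Under these assumptions a maximum-length message is split into eight packets, seven of them full and the last one a single bit short of full.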

ARPA then put out a tender for

building the subnet. Twelve companies bid for it. After evaluating all the

proposals, ARPA selected BBN, a consulting firm in Cambridge, Massachusetts,

and in December 1968, awarded it a contract to build the subnet and write the

subnet software. BBN chose to use specially modified Honeywell DDP-316

minicomputers with

12K 16-bit words of core memory as the

IMPs. The IMPs did not have disks, since moving parts were considered

unreliable. The IMPs were interconnected by 56-kbps lines leased from telephone

companies. Although 56 kbps is now the choice of teenagers who cannot afford

ADSL or cable, it was then the best money could buy.

The software was split into two parts:

subnet and host. The subnet software consisted of the IMP end of the host-IMP

connection, the IMP-IMP protocol, and a source IMP to destination IMP protocol

designed to improve reliability. The original ARPANET design is shown in

Fig.10. Outside the subnet, software was also needed, namely, the host end of

the host-IMP connection, the host-host protocol, and the application software.

It soon became clear that BBN felt that when it had accepted a message on a

host-IMP wire and placed it on the host-IMP wire at the destination, its job

was done.

Question No. 6

What is the TCP/IP model? Explain the functions,

protocols, and services of each layer. Compare it with the OSI model.

Answer: -

The TCP/IP MODEL:-

The TCP/IP reference model was

developed prior to the OSI model. The major design goals of this

model were,

1. To connect multiple networks

together so that they appear as a single network.

2. To survive after partial

subnet hardware failures.

3. To provide a flexible

architecture.

Unlike the OSI reference model,

TCP/IP reference model has only 4 layers. They are,

1. Host-to-Network Layer

2. Internet Layer

3. Transport Layer

4. Application Layer

1. Host-to-Network Layer:

The TCP/IP reference model does not

really say much about what happens here, except to point

out that the host has to connect to

the network using some protocol so it can send IP packets to it. This protocol

is not defined and varies from host to host and network to network.

2. Internet Layer:

This layer, called the internet

layer, is the linchpin that holds the whole architecture together. Its

job is to permit hosts to inject

packets into any network and have them travel independently to the destination

(potentially on a different network). They may even arrive in a different order

than they were sent, in which case it is the job of higher layers to rearrange

them, if in-order delivery is desired. Note that ''internet'' is used here in a

generic sense, even though this layer is present in the Internet.

The internet layer defines an

official packet format and protocol called IP (Internet Protocol). The job of

the internet layer is to deliver IP packets where they are supposed to go.

Packet routing is clearly the major issue here,

as is avoiding congestion. For these reasons, it is reasonable to say that the

TCP/IP internet layer is similar in functionality to the OSI network layer.

3. The Transport Layer:

The layer above the internet layer

in the TCP/IP model is now usually called the transport layer.

It is designed to allow peer

entities on the source and destination hosts to carry on a conversation, just

as in the OSI transport layer. Two end-to-end transport protocols have been

defined here. The first one, TCP (Transmission Control Protocol), is a reliable

connection-oriented protocol that allows a byte stream originating on one

machine to be delivered without error on any other machine in the internet. It

fragments the incoming byte stream into discrete messages and passes each one

on to the internet layer. At the destination, the receiving TCP process reassembles

the received messages into the output stream. TCP also handles flow control to

make sure a fast sender cannot swamp a slow receiver with more messages than it

can handle.

The second protocol in this layer,

UDP (User Datagram Protocol), is an unreliable, connectionless protocol for

applications that do not want TCP's sequencing or flow control and wish to

provide their own. It is also widely used for one-shot, client-server-type

request-reply queries and applications in which prompt delivery is more important

than accurate delivery, such as transmitting speech or video. Since the model

was developed, IP has been implemented on many other networks.

4. The Application Layer:

The TCP/IP model does not have

session or presentation layers. On top of the transport layer is

the application layer. It contains

all the higher-level protocols. The early ones included virtual terminal

(TELNET), file transfer (FTP), and electronic mail (SMTP), as shown in Fig.6.2.

The virtual terminal protocol allows a user on one machine to log onto a

distant machine and work there. The file transfer protocol provides a way to

move data efficiently from one machine to another. Electronic mail was

originally just a kind of file transfer, but later a specialized protocol

(SMTP) was developed for it. Many other protocols have been added to these over

the years: the Domain Name System (DNS) for mapping host names onto their

network addresses, NNTP, the protocol for moving USENET news articles around,

and HTTP, the protocol for fetching pages on the World Wide Web, and many

others.

Comparison of the OSI and TCP/IP

Reference Models:

The OSI and TCP/IP reference models

have much in common. Both are based on the concept of

a stack of independent protocols.

Also, the functionality of the layers is roughly similar. For example, in both

models the layers up through and including the transport layer are there to

provide an end-to-end, network-independent transport service to processes

wishing to communicate. These layers form the transport provider. Again in both

models, the layers above transport are application-oriented users of the

transport service. Despite these fundamental similarities, the two models also

have many differences.

Three concepts are central to the

OSI model:

1. Services.

2. Interfaces.

3. Protocols.

Probably the biggest contribution

of the OSI model is to make the distinction between these three concepts

explicit. Each layer performs some services for the layer above it. The service

definition tells what the layer does, not how entities above it access it or

how the layer works. It defines the layer's semantics. A layer's interface

tells the processes above it how to access it. It specifies what the parameters

are and what results to expect. It, too, says nothing about how the layer works

inside.

Finally, the peer protocols used in

a layer are the layer's own business. It can use any protocols it wants to, as

long as it gets the job done (i.e., provides the offered services). It can also

change them at will without affecting software in higher layers. The TCP/IP

model did not originally clearly distinguish between service, interface, and

protocol, although people have tried to retrofit it after the fact to make it

more OSI-like. For example, the only real services offered by the internet

layer are SEND IP PACKET and RECEIVE IP PACKET.

As a consequence, the protocols in

the OSI model are better hidden than in the TCP/IP model and can be replaced

relatively easily as the technology changes. Being able to make such changes is

one of the main purposes of having layered protocols in the first place. The

OSI reference model was devised before the corresponding protocols were

invented.

This ordering means that the model

was not biased toward one particular set of protocols, a fact that made it

quite general. The downside of this ordering is that the designers did not have

much experience with the subject and did not have a good idea of which

functionality to put in which layer.

Another difference is in the area of

connectionless versus connection-oriented communication. The OSI model supports

both connectionless and connection-oriented communication in the network layer,

but only connection-oriented communication in the transport layer, where it

counts (because the transport service is visible to the users). The TCP/IP

model has only one mode in the network layer (connectionless) but supports both

modes in the transport layer, giving the users a choice. This choice is

especially important for simple request-response protocols.

Question No. 7

Explain the different types of transmission media

in computer networks.

Answer: -

A network medium is the actual path over which a

signal travels as it moves from one component to another. Common types of

network media include twisted-pair cable, coaxial cable,

fiber-optic cable, and wireless.

GUIDED MEDIA

Guided media, which are those that provide a conduit from one device to another, include twisted-pair cable, coaxial cable, and fiber-optic cable. A signal traveling along any of these media is directed and contained by the physical limits of the medium. Twisted-pair and coaxial cable use metallic (copper) conductors that accept and transport signals in the form of electric current. Optical fiber is a cable that accepts and transports signals in the form of light.

Twisted-Pair Cable

A twisted pair consists of two conductors (normally copper), each with its own plastic insulation, twisted together, as shown in following figure.

One of the wires is used to carry signals to the receiver, and the other is used only as a ground reference. The receiver uses the difference between the two. In addition to the signal sent by the sender on one of the wires, interference (noise) and crosstalk may affect both wires and create unwanted signals. If the two wires are parallel, the effect of these unwanted signals is not the same in both wires because they are at different locations relative to the noise or crosstalk sources (e.g., one is closer and the other is farther). This results in a difference at the receiver. By twisting the pairs, a balance is maintained. For example, suppose in one twist, one wire is closer to the noise source and the other is farther; in the next twist, the reverse is true. Twisting makes it probable that both wires are equally affected by external influences (noise or crosstalk). This means that the receiver, which calculates the difference between the two, receives no unwanted signals. The unwanted signals are mostly canceled out. From the above discussion, it is clear that the number of twists per unit of length (e.g., inch) has some effect on the quality of the cable.
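The cancellation argument can be checked with a few numbers. The sketch below is only an idealized model: the voltage samples, the noise samples, and the assumption that the farther wire of a parallel pair picks up half the noise are all invented for illustration.

```python
signal = [0.0, 1.0, 1.0, 0.0, 1.0]   # voltages the sender puts on the signal wire
noise = [0.3, -0.2, 0.5, 0.1, -0.4]  # interference picked up along the cable


def received(signal, noise_on_signal_wire, noise_on_reference_wire):
    """Return what the receiver sees: signal wire minus reference wire."""
    signal_wire = [s + n for s, n in zip(signal, noise_on_signal_wire)]
    reference_wire = noise_on_reference_wire
    return [round(a - b, 6) for a, b in zip(signal_wire, reference_wire)]


# Twisted pair: both wires are, on average, equally exposed to the noise.
twisted = received(signal, noise, noise)

# Parallel wires: assume the farther wire picks up only half of the noise.
parallel = received(signal, noise, [0.5 * n for n in noise])

print(twisted)
print(parallel)
```

With equal noise on both wires the difference equals the transmitted signal, while the unequal exposure of the parallel wires leaves part of the noise in the received values.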

Unshielded Versus Shielded Twisted-Pair Cable

The most common twisted-pair cable used in communications is referred to as unshielded twisted-pair (UTP). IBM has also produced a version of twisted-pair cable for its use, called shielded twisted-pair (STP). STP cable has a metal foil or braided mesh covering that encases each pair of insulated conductors. Although metal casing improves the quality of cable by preventing the penetration of noise or crosstalk, it is bulkier and more expensive.

ii. Shielded Twisted-Pair Cable

Ø Shielded twisted-pair (STP)

cable combines the techniques of shielding, cancellation, and wire twisting.

Each pair of wires is wrapped in a metallic foil. The four pairs of wires then

are wrapped in an overall metallic braid or foil, usually 150-ohm cable. STP

usually is installed with an STP data connector, which is created especially for

the STP cable. However, STP cabling also can use the same RJ connectors that

UTP uses.

Ø Although

STP prevents interference better than UTP, it is more expensive and difficult

to install. In addition, the metallic shielding must be grounded at both ends.

If it is improperly grounded, the shield acts like an antenna and picks up unwanted

signals. Because of its cost and difficulty with termination, STP is rarely

used in Ethernet networks. STP is primarily used in Europe.

The following summarizes the features of STP cable:

Ø Speed and throughput: 10 to 100 Mbps

Ø Average cost per node: Moderately expensive

Ø Media and connector size: Medium to large

Ø Maximum cable length: 100 m (short)

Coaxial Cable

Ø Coaxial cable consists of a hollow

outer cylindrical conductor that surrounds a single inner wire made of two

conducting elements. One of these elements, located in the center of the cable,

is a copper conductor. Surrounding the copper conductor is a layer of flexible

insulation. Over this insulating material is a woven copper braid or metallic

foil that acts both as the second wire in the circuit and as a shield for the

inner conductor. This second layer, or shield, can help reduce the amount of

outside interference. Covering this shield is the cable jacket.

Ø Coaxial

cable supports 10 to 100 Mbps and is relatively inexpensive, although it is

more costly than UTP on a per-unit length. However, coaxial cable can be

cheaper for a physical bus topology because less cable will be needed. Coaxial

cable can be cabled over longer distances than twisted-pair cable. For example,

Ethernet can run approximately 100 meters (328 feet) using twisted-pair

cabling. Using coaxial cable increases this distance to 500m (1640.4 feet).

Ø For

LANs, coaxial cable offers several advantages. It can be run with fewer boosts

from repeaters for longer distances between network nodes than either STP or

UTP cable. Repeaters regenerate the signals in a network so that they can cover

greater distances. Coaxial cable is less expensive than fiber-optic cable, and

the technology is well known; it has been used for many years for all types of data

communication.

Ø When

working with cable, you need to consider its size. As the thickness, or

diameter, of the cable increases, so does the difficulty in working with it.

Many times cable must be pulled through existing conduits and troughs that are

limited in size. Coaxial cable comes in a variety of sizes. The largest

diameter (1 centimeter [cm]) was specified for use as Ethernet backbone cable

because historically it had greater transmission length and noise-rejection

characteristics. In the past, coaxial cable with an outside diameter of only

0.35 cm (sometimes referred to as Thinnet) was used in Ethernet networks.

The following summarizes the features of

coaxial cables:

Ø Speed and throughput: 10 to 100 Mbps

Ø Average cost per node: Inexpensive

Ø Media and connector size: Medium

Ø Maximum cable length: 500 m (medium)

Fiber-Optic Cable

A fiber-optic cable is made of glass or plastic and transmits signals in the form of light. To understand optical fiber, we first need to explore several aspects of the nature of light. Light travels in a straight line as long as it is moving through a single uniform substance. If a ray of light traveling through one substance suddenly enters another substance (of a different density), the ray changes direction. The figure below shows how a ray of light changes direction when going from a denser to a less dense substance. As the figure shows, if the angle of incidence I (the angle the ray makes with the line perpendicular to the interface between the two substances) is less than the critical angle, the ray refracts and moves closer to the surface. If the angle of incidence is equal to the critical angle, the light bends along the interface. If the angle is greater than the critical angle, the ray reflects (makes a turn) and travels again in the denser substance. Note that the critical angle is a property of the substance, and its value differs from one substance to another.

Optical fibers use reflection to guide light through a channel. A glass or plastic core is surrounded by a cladding of less dense glass or plastic. The difference in density of the two materials must be such that a beam of light moving through the core is reflected off the cladding instead of being refracted into it. See the figure below.

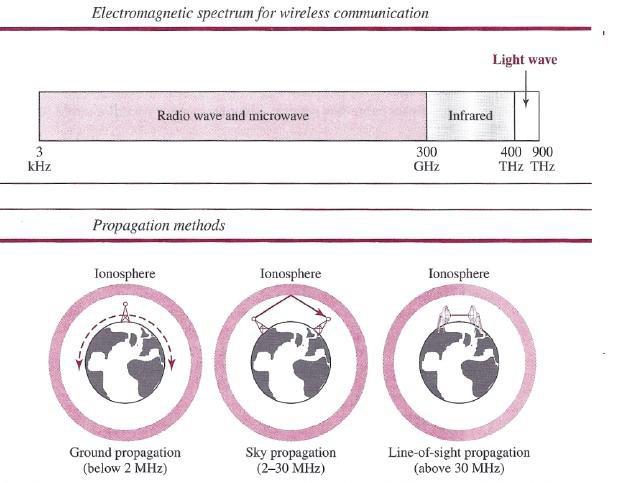
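The three cases above (refraction, bending along the interface, reflection) can be made concrete with Snell's law, under which the critical angle satisfies sin(critical angle) = n_cladding / n_core. The text speaks of density rather than refractive index, so the refractive indices used here (1.48 for the core and 1.46 for the cladding) are assumed values chosen only for illustration.

```python
import math


def critical_angle_deg(n_core: float, n_cladding: float) -> float:
    """Critical angle, measured from the perpendicular to the interface."""
    if n_cladding >= n_core:
        raise ValueError("the cladding must be less dense than the core")
    return math.degrees(math.asin(n_cladding / n_core))


def behaviour(angle_of_incidence_deg: float, n_core: float, n_cladding: float) -> str:
    """Classify a ray in the core by its angle of incidence."""
    critical = critical_angle_deg(n_core, n_cladding)
    if math.isclose(angle_of_incidence_deg, critical):
        return "bends along the interface"
    if angle_of_incidence_deg < critical:
        return "refracts into the cladding"
    return "reflects back into the core"


N_CORE, N_CLADDING = 1.48, 1.46  # hypothetical refractive indices

print(round(critical_angle_deg(N_CORE, N_CLADDING), 2))
print(behaviour(60.0, N_CORE, N_CLADDING))
print(behaviour(85.0, N_CORE, N_CLADDING))
```

For these assumed values the critical angle is a little over 80 degrees, so only rays that strike the cladding at a shallow grazing angle stay inside the core.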

UNGUIDED MEDIA: WIRELESS

Unguided media transport electromagnetic waves without using a physical conductor. This type of communication is often referred to as wireless communication. Signals are normally broadcast through free space and thus are available to anyone who has a device capable of receiving them. Figure 7.17 shows the part of the electromagnetic spectrum, ranging from 3 kHz to 900 THz, used for wireless communication. Unguided signals can travel from the source to the destination in several ways: ground propagation, sky propagation, and line-of-sight propagation, as shown in the figure below.

In ground propagation, radio waves travel through the lowest portion of the atmosphere, hugging the earth. These low-frequency signals emanate in all directions from the transmitting antenna and follow the curvature of the planet. Distance depends on the amount of power in the signal: The greater the power, the greater the distance. In sky propagation, higher-frequency radio waves radiate upward into the ionosphere (the layer of atmosphere where particles exist as ions) where they are reflected back to earth. This type of transmission allows for greater distances with lower output power. In line-of-sight propagation, very high-frequency signals are transmitted in straight lines directly from antenna to antenna.

The section of the electromagnetic spectrum defined as radio waves and microwaves is divided into eight ranges, called bands, each regulated by government authorities. These bands are rated from very low frequency (VLF) to extremely high frequency (EHF). Below table lists these bands, their ranges, propagation methods, and some applications

Radio Waves

Although there is no clear-cut demarcation between radio waves and microwaves, electromagnetic waves ranging in frequencies between 3 kHz and 1 GHz are normally called radio waves; waves ranging in frequencies between 1 and 300 GHz are called microwaves. However, the behavior of the waves, rather than the frequencies, is a better criterion for classification. Radio waves, for the most part, are omnidirectional. When an antenna transmits radio waves, they are propagated in all directions. This means that the sending and receiving antennas do not have to be aligned. A sending antenna sends waves that can be received by any receiving antenna. The omnidirectional property has a disadvantage, too. The radio waves transmitted by one antenna are susceptible to interference by another antenna that may send signals using the same frequency or band. Radio waves, particularly those waves that propagate in the sky mode, can travel long distances. This makes radio waves a good candidate for long-distance broadcasting such as AM radio.

Omnidirectional Antenna

Radio waves use omnidirectional antennas that send out signals in all directions. Based on the wavelength, strength, and the purpose of transmission, we can have several types of antennas. The figure below shows an omnidirectional antenna.

Microwaves

Electromagnetic waves having frequencies between 1 and 300 GHz are called microwaves. Microwaves are unidirectional. When an antenna transmits microwaves, they can be narrowly focused. This means that the sending and receiving antennas need to be aligned. The unidirectional property has an obvious advantage. A pair of antennas can be aligned without interfering with another pair of aligned antennas.

The following describes some characteristics of microwave propagation:

Microwave propagation is line-of-sight. Since the towers with the mounted antennas need to be in direct sight of each other, towers that are far apart need to be very tall. The curvature of the earth as well as other blocking obstacles do not allow two short towers to communicate by using microwaves. Repeaters are often needed for long-distance communication.

Very high-frequency microwaves cannot penetrate walls. This characteristic can be a disadvantage if receivers are inside buildings.

The microwave band is relatively wide, almost 299 GHz. Therefore wider subbands can be assigned, and a high data rate is possible.

Use of certain portions of the band requires permission from authorities.

Unidirectional Antenna

Microwaves need unidirectional antennas that send out signals in one direction. Two types of antennas are used for microwave communications: the parabolic dish and the horn.

A parabolic dish antenna is based on the geometry of a parabola: Every line parallel to the line of symmetry (line of sight) reflects off the curve at angles such that all the lines intersect in a common point called the focus. The parabolic dish works as a funnel, catching a wide range of waves and directing them to a common point. In this way, more of the signal is recovered than would be possible with a single-point receiver.

Outgoing transmissions are broadcast through a horn aimed at the dish. The microwaves hit the dish and are deflected outward in a reversal of the receipt path. A horn antenna looks like a gigantic scoop. Outgoing transmissions are broadcast up a stem (resembling a handle) and deflected outward in a series of narrow parallel beams by the curved head. Received transmissions are collected by the scooped shape of the horn, in a manner similar to the parabolic dish, and are deflected down into the stem.

Applications

Microwaves, due to their unidirectional properties, are very useful when unicast (one-to-one) communication is needed between the sender and the receiver. They are used in cellular telephones, satellite networks, and wireless LANs.

Infrared

Infrared waves, with frequencies from 300 GHz to 400 THz (wavelengths from 1 mm to 770 nm), can be used for short-range communication. Infrared waves, having high frequencies, cannot penetrate walls. This advantageous characteristic prevents interference between one system and another; a short-range communication system in one room cannot be affected by another system in the next room. When we use our infrared remote control, we do not interfere with the use of the remote by our neighbors. However, this same characteristic makes infrared signals useless for long-range communication. In addition, we cannot use infrared waves outside a building because the sun's rays contain infrared waves that can interfere with the communication.

Applications

The infrared band, almost 400 THz, has an excellent potential for data transmission. Such a wide bandwidth can be used to transmit digital data with a very high data rate. The Infrared Data Association (IrDA), an association for sponsoring the use of infrared waves, has established standards for using these signals for communication between devices such as keyboards, mice, PCs, and printers. For example, some manufacturers provide a special port called the IrDA port that allows a wireless keyboard to communicate with a PC. The standard originally defined a data rate of 75 kbps for a distance up to 8 m. The recent standard defines a data rate of 4 Mbps.

Infrared signals defined by IrDA transmit through line of sight; the IrDA port on the keyboard needs to point to the PC for transmission to occur. Infrared signals can be used for short-range communication in a closed area using line-of-sight propagation.
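The frequency ranges quoted in this answer (radio waves from 3 kHz to 1 GHz, microwaves from 1 to 300 GHz, infrared from 300 GHz to 400 THz) can be collected into a small lookup. This is only a sketch: the function name is hypothetical, and assigning each shared boundary frequency to the higher band is an assumption, since the text notes there is no clear-cut demarcation.

```python
KHZ, GHZ, THZ = 1e3, 1e9, 1e12


def classify_wave(frequency_hz: float) -> str:
    """Name the kind of unguided wave for a given frequency."""
    if 3 * KHZ <= frequency_hz < 1 * GHZ:
        return "radio wave"
    if 1 * GHZ <= frequency_hz < 300 * GHZ:
        return "microwave"
    if 300 * GHZ <= frequency_hz <= 400 * THZ:
        return "infrared"
    return "outside the ranges discussed"


for frequency in (1e6, 2.4e9, 3e14, 6e14):
    print(frequency, classify_wave(frequency))
```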

Question No. 8

What are the various types of error correcting techniques?

Answer:

Ø Error detection is the detection of errors caused by noise or other

impairments during transmission from the transmitter to the receiver.

Ø Error correction is the detection of errors and reconstruction of the

original, error-free data.

Implementation

Error correction may generally be realized in two

different ways:

Ø Automatic repeat request (ARQ)

(sometimes also referred to as backward error correction): This is an

error control technique whereby an error detection scheme is combined with

requests for retransmission of erroneous data.

Ø Forward error correction (FEC): The sender encodes the data using an error-correcting

code (ECC) prior to transmission. The additional information (redundancy)

added by the code is used by the receiver to recover the original data.
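To illustrate forward error correction, the sketch below uses a triple-repetition code with majority voting. This code is chosen here only because it is the simplest error-correcting code; the text does not name a specific code, and practical systems use far more efficient ones.

```python
def fec_encode(bits):
    """Send every data bit three times (the added redundancy)."""
    return [bit for bit in bits for _ in range(3)]


def fec_decode(coded):
    """Recover each data bit by majority vote over its three copies."""
    return [
        1 if sum(coded[i:i + 3]) >= 2 else 0
        for i in range(0, len(coded), 3)
    ]


data = [1, 0, 1, 1]
sent = fec_encode(data)

# Simulate a transmission error: flip one bit in the second group of three.
corrupted = sent.copy()
corrupted[4] ^= 1

recovered = fec_decode(corrupted)
print(recovered == data)
```

The receiver repairs the single flipped bit without asking for a retransmission, which is the difference from ARQ, where the detected error would trigger a retransmission request instead.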

Error detection schemes

Ø Error detection is most commonly realized using a suitable hash function (or checksum algorithm).

A hash function adds a fixed-length tag to a message, which enables

receivers to verify the delivered message by re-computing the tag and comparing

it with the one provided.

Ø There exists a vast variety of different hash function designs.

However, some are of particularly widespread use because of either their

simplicity or their suitability for detecting certain kinds of errors (e.g.,

the cyclic redundancy check's

performance in detecting burst errors).

Ø Random-error-correcting codes

based on minimum distance

coding can provide a suitable alternative to hash functions when a strict

guarantee on the minimum number of errors to be detected is desired

Ø Parity

bits

A parity bit is a bit that

is added to a group of source bits to ensure that the number of set bits (i.e.,

bits with value 1) in the outcome is even or odd. It is a very simple scheme

that can be used to detect single or any other odd number (i.e., three, five, etc.)

of errors in the output. An even number of flipped bits will make the parity

bit appear correct even though the data is erroneous.
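A minimal sketch of even parity follows, showing both the detected single-bit error and the undetected double-bit error described above. The helper names and the sample bits are made up for the example.

```python
def add_even_parity(bits):
    """Append a parity bit so that the total number of 1 bits is even."""
    return bits + [sum(bits) % 2]


def parity_ok(word):
    """Check that a received word still has an even number of 1 bits."""
    return sum(word) % 2 == 0


word = add_even_parity([1, 0, 1, 1, 0, 0, 1])

one_error = word.copy()
one_error[2] ^= 1  # a single flipped bit

two_errors = word.copy()
two_errors[2] ^= 1  # two flipped bits cancel each other out
two_errors[5] ^= 1

print(parity_ok(word), parity_ok(one_error), parity_ok(two_errors))
```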

Ø Checksums

A checksum of a message is a

modular arithmetic sum of message code words of

a fixed word length (e.g., byte values). The sum may be negated by means of a ones'-complement

operation prior to transmission to detect errors resulting in all-zero

messages.

Checksum schemes include parity bits,

check digits,

and longitudinal redundancy checks.
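The checksum idea can be sketched with byte-sized code words: sum the bytes modulo 256 and invert the result before transmission. The word size, function names, and sample message are assumptions for illustration; real protocols such as the Internet checksum use 16-bit words and a different carry rule.

```python
def checksum(data: bytes) -> int:
    """Sum the bytes modulo 256, then invert all eight bits."""
    return ~sum(data) & 0xFF


def verify(data: bytes, check: int) -> bool:
    """The byte sum plus the checksum must come out as all ones."""
    return ((sum(data) + check) & 0xFF) == 0xFF


message = b"HELLO"
check = checksum(message)

corrupted = bytes([message[0] ^ 0x01]) + message[1:]

print(verify(message, check), verify(corrupted, check))
```

Because of the inversion, a frame made up entirely of zero bytes, checksum included, fails the check: the zero data bytes would need a checksum of 0xFF.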

Ø Cyclic

redundancy checks (CRCs)

A CRC (Cyclic Redundancy Check) is also known as a polynomial code.

A cyclic

redundancy check (CRC) is a single-burst-error-detecting cyclic code

and non-secure hash function designed to detect accidental

changes to digital data in computer networks. It is characterized by

specification of a so-called generator polynomial, which is used as the divisor

in a polynomial long division over a finite field,

taking the input data as the dividend, and where the remainder

becomes the result.

Even parity is a

special case of a cyclic redundancy check, where the single-bit CRC is

generated by the divisor x + 1.

Polynomial

codes are based upon treating bit strings as representations of polynomials

with coefficients of 0 and 1 only. A k-bit frame is regarded as the coefficient

list for a polynomial with k terms, ranging from x^(k-1) to x^0. Such a polynomial

is said to be of degree k - 1. The high-order (leftmost) bit is the coefficient

of x^(k-1); the next bit is the coefficient of x^(k-2), and so on. For example,

110001 has 6 bits and thus represents a six-term polynomial with coefficients 1,

1, 0, 0, 0, and 1: x^5 + x^4 + x^0.

Polynomial

arithmetic is done modulo 2, according to the rules of algebraic field theory.

There are no carries for addition or borrows for subtraction. Both addition and

subtraction are identical to exclusive OR. For example, 10011011 + 11001010 = 01010001. Long division is

carried out the same way as it is in binary except that the subtraction is done

modulo 2, as above. A divisor is said ''to go into'' a dividend if the dividend

has as many bits as the divisor. When the polynomial code method is employed,

the sender and receiver must agree upon a generator polynomial, G(x), in

advance. Both the high- and low-order bits of the generator must be 1. To

compute the checksum for some frame with m bits, corresponding to the

polynomial M(x), the frame must be longer than the generator polynomial. The

idea is to append a checksum to the end of the frame in such a way that the

polynomial represented by the checksummed frame is divisible by G(x). When the

receiver gets the checksummed frame, it tries dividing it by G(x). If there is

a remainder, there has been a transmission error.

The

algorithm for computing the checksum is as follows:

1. Let r be the degree of G(x). Append r zero bits to the low-order end of the frame so it now contains m + r bits and corresponds to the polynomial x^r M(x).

2. Divide the bit string corresponding to G(x) into the bit string corresponding to x^r M(x), using modulo-2 division.

3. Subtract the remainder (which is always r or fewer bits) from the bit string corresponding to x^r M(x) using modulo-2 subtraction. The result is the checksummed frame to be transmitted. Call its polynomial T(x).

Figure: Calculation of the polynomial code checksum for the frame 1101011011 using the generator G(x) = x^4 + x + 1.
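The three steps of the algorithm can be sketched with bit strings, using the frame 1101011011 and the generator x^4 + x + 1 (bit string 10011) from the text. The function names are hypothetical, and working on character strings is chosen for readability rather than speed.

```python
def mod2_remainder(bits: str, generator: str) -> str:
    """Remainder of a modulo-2 long division, as a string of r bits."""
    r = len(generator) - 1
    work = [int(b) for b in bits]
    gen = [int(b) for b in generator]
    for i in range(len(work) - r):
        if work[i] == 1:                 # the divisor "goes into" the dividend here
            for j, g in enumerate(gen):
                work[i + j] ^= g         # modulo-2 subtraction is exclusive OR
    return "".join(str(b) for b in work[-r:])


def crc_encode(frame: str, generator: str) -> str:
    """Steps 1 to 3: append r zeros, divide, put the remainder in their place."""
    r = len(generator) - 1
    return frame + mod2_remainder(frame + "0" * r, generator)


def crc_check(received: str, generator: str) -> bool:
    """A frame is accepted only if G(x) divides it with no remainder."""
    return set(mod2_remainder(received, generator)) == {"0"}


FRAME, GENERATOR = "1101011011", "10011"

transmitted = crc_encode(FRAME, GENERATOR)
print(transmitted)  # 11010110111110

damaged = transmitted[:3] + ("0" if transmitted[3] == "1" else "1") + transmitted[4:]
print(crc_check(transmitted, GENERATOR), crc_check(damaged, GENERATOR))
```

Subtracting the remainder in step 3 is the same as writing it over the appended zeros, because modulo-2 subtraction from a zero bit simply copies the remainder bit.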

Error-correcting codes

Any error-correcting code can be used

for error detection. A code with minimum Hamming distance, d, can detect up to d − 1 errors in a code

word. Using minimum-distance-based error-correcting codes for error detection

can be suitable if a strict limit on the minimum number of errors to be

detected is desired.

Codes with minimum Hamming distance d

= 2 are degenerate cases of error-correcting codes, and can be used to detect

single errors. The parity bit is an example of a single-error-detecting code.
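The relationship between minimum Hamming distance and detectable errors can be checked on a tiny code. The example below assumes a made-up code consisting of all 3-bit words with even parity; the helper names are hypothetical.

```python
from itertools import combinations


def hamming_distance(a: str, b: str) -> int:
    """Number of bit positions in which two equal-length code words differ."""
    return sum(x != y for x, y in zip(a, b))


def minimum_distance(code) -> int:
    """Smallest Hamming distance between any two distinct code words."""
    return min(hamming_distance(a, b) for a, b in combinations(code, 2))


# Two data bits followed by an even parity bit.
even_parity_code = ["000", "011", "101", "110"]

d = minimum_distance(even_parity_code)
detectable_errors = d - 1

print(d, detectable_errors)
```

The minimum distance of this parity code is 2, so it detects any single-bit error, in line with the statement that a code with minimum distance d detects up to d − 1 errors.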

Question No. 9

Explain sliding window protocols.

Answer:

In most

practical situations, there is a need for transmitting data in both directions.

One way of achieving full duplex data transmission is to have two separate

communication channels and use each one for simplex data traffic (in different

directions). If this is done, we have two separate physical circuits, each with

a ''forward'' channel (for data) and a ''reverse'' channel (for

acknowledgements). In both cases the bandwidth of the reverse channel is almost

entirely wasted. In effect, the user is paying for two circuits but using only

the capacity of one. A better idea is to use the same circuit for data in both

directions. After all, in protocols 2 and 3 it was already being used to

transmit frames both ways, and the reverse channel has the same capacity as the

forward channel. In this model the data frames from A to B are intermixed with

the acknowledgement frames from A to B. By looking at the kind field in the

header of an incoming frame, the receiver can tell whether the frame is data or

acknowledgement.

Although

interleaving data and control frames on the same circuit is an improvement over

having two separate physical circuits, yet another improvement is possible.

When a data frame arrives, instead of immediately sending a separate control

frame, the receiver restrains itself and waits until the network layer passes

it the next packet. The acknowledgement is attached to the outgoing data frame

(using the ACK field in the frame header). In effect, the acknowledgement gets

a free ride on the next outgoing data frame. The technique of temporarily

delaying outgoing acknowledgements so that they can be hooked onto the next

outgoing data frame is known as piggybacking. The principal advantage of using

piggybacking over having distinct acknowledgement frames is a better use of the

available channel bandwidth. The ACK field in the frame header costs only a few

bits, whereas a separate frame would need a header, the acknowledgement, and a

checksum. In addition, fewer frames sent means fewer ''frame arrival''

interrupts, and perhaps fewer buffers in the receiver, depending on how the

receiver's software is organized. In the next protocol to be examined, the

piggyback field costs only 1 bit in the frame header. It rarely costs more than

a few bits.
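
Piggybacking can be sketched as follows. This is a simplified illustration, not the textbook's code: the Frame fields and the Station class are assumptions made for the example, and the rule for what to do when no outgoing packet ever arrives is left out.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Frame:
    kind: str             # "data" or "ack"
    seq: Optional[int]    # sequence number of a data frame
    ack: Optional[int]    # piggybacked acknowledgement, if any
    info: Optional[str]   # packet from the network layer


class Station:
    def __init__(self) -> None:
        self.pending_ack: Optional[int] = None   # acknowledgement waiting for a ride
        self.next_seq = 0

    def on_data_frame(self, frame: Frame) -> None:
        # Do not send a separate control frame; remember what must be acknowledged.
        self.pending_ack = frame.seq

    def send_packet(self, packet: str) -> Frame:
        # The waiting acknowledgement travels in the ACK field of the data frame.
        frame = Frame("data", self.next_seq, self.pending_ack, packet)
        self.pending_ack = None
        self.next_seq += 1
        return frame


station = Station()
station.on_data_frame(Frame("data", 5, None, "incoming"))
outgoing = station.send_packet("reply")
print(outgoing.kind, outgoing.seq, outgoing.ack)   # data 0 5
```

One outgoing frame carries both the reply data and the acknowledgement of frame 5, so no separate acknowledgement frame is needed.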

Figure: A sliding window of size 1, with a 3-bit sequence number. (a) Initially. (b) After the first frame has been sent. (c) After the first frame has been received. (d) After the first acknowledgement has been received.

Since frames

currently within the sender's window may ultimately be lost or damaged in

transit, the sender must keep all these frames in its memory for possible

retransmission. Thus, if the maximum window size is n, the sender needs n

buffers to hold the unacknowledged frames. If the window ever grows to its

maximum size, the sending data link layer must forcibly shut off the network

layer until another buffer becomes free. The receiving data link layer's window

corresponds to the frames it may accept. Any frame falling outside the window

is discarded without comment. When a frame whose sequence number is equal to

the lower edge of the window is received, it is passed to the network layer, an

acknowledgement is generated, and the window is rotated by one. Unlike the

sender's window, the receiver's window always remains at its initial size. Note

that a window size of 1 means that the data link layer only accepts frames in

order, but for larger windows this is not so. The network layer, in contrast,

is always fed data in the proper order, regardless of the data link layer's

window size. The figure shows an example with a maximum window size of 1.
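
The window bookkeeping described above can be sketched as below, assuming a 3-bit sequence number and a receiver window fixed at size 1. The class names SenderWindow and ReceiverWindow are hypothetical, and lost frames and timers are not modelled.

```python
class SenderWindow:
    def __init__(self, seq_bits: int, max_window: int) -> None:
        self.modulus = 2 ** seq_bits   # sequence numbers wrap around
        self.max_window = max_window
        self.lower = 0                 # oldest unacknowledged frame
        self.upper = 0                 # next sequence number to use
        self.buffers = {}              # seq -> packet kept for retransmission

    def can_send(self) -> bool:
        # When the window is full, the network layer must be shut off.
        return len(self.buffers) < self.max_window

    def send(self, packet: str) -> int:
        if not self.can_send():
            raise RuntimeError("window full: network layer is blocked")
        seq = self.upper
        self.buffers[seq] = packet
        self.upper = (self.upper + 1) % self.modulus
        return seq

    def acknowledge(self, seq: int) -> None:
        if seq == self.lower and seq in self.buffers:
            del self.buffers[seq]      # a buffer becomes free
            self.lower = (self.lower + 1) % self.modulus


class ReceiverWindow:
    def __init__(self, seq_bits: int) -> None:
        self.modulus = 2 ** seq_bits
        self.expected = 0              # lower edge of a window of size 1
        self.delivered = []            # packets passed to the network layer

    def receive(self, seq: int, packet: str):
        if seq != self.expected:
            return None                # outside the window: discarded without comment
        self.delivered.append(packet)
        self.expected = (self.expected + 1) % self.modulus   # rotate the window
        return seq                     # acknowledgement for the accepted frame


sender, receiver = SenderWindow(3, 1), ReceiverWindow(3)
seq = sender.send("packet 0")
print(sender.can_send())               # False: one frame outstanding
sender.acknowledge(receiver.receive(seq, "packet 0"))
print(sender.can_send())               # True: the buffer is free again
```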

A One-Bit Sliding Window Protocol

Before

tackling the general case, let us first examine a sliding window protocol with

a maximum window size of 1. Such a protocol uses stop-and-wait since the sender

transmits a frame and waits for its acknowledgement before sending the next

one. Like the others, it starts out by defining some variables. The variable

next_frame_to_send tells which frame the sender is trying to send. Similarly,

frame_expected tells which frame the receiver is expecting. In both cases, 0 and 1

are the only possibilities.

Under

normal circumstances, one of the two data link layers goes first and transmits

the first frame. In other words, only one of the data link layer programs

should contain the to_physical_layer and start_timer procedure calls outside

the main loop. In the event that both data link layers start off

simultaneously, a peculiar situation arises, as discussed later. The starting

machine fetches the first packet from its network layer, builds a frame from

it, and sends it. When this (or any) frame arrives, the receiving data link

layer checks to see if it is a duplicate, just as in protocol 3. If the frame

is the one expected, it is passed to the network layer and the receiver's

window is slid up. The acknowledgement field contains the number of the last

frame received without error. If this number agrees with the sequence number of

the frame the sender is trying to send, the sender knows it is done with the

frame stored in buffer and can fetch the next packet from its network layer. If

the sequence number disagrees, it must continue trying to send the same frame.

Whenever a frame is received, a frame is also sent back. Now let us examine

protocol 4 to see how resilient it is to pathological scenarios. Assume that

computer A is trying to send its frame 0 to computer B and that B is trying to

send its frame 0 to A. Suppose that A sends a frame to B, but A's timeout

interval is a little too short. Consequently, A may time out repeatedly,

sending a series of identical frames, all with seq = 0 and ACK = 1.
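
The scenario can be traced with a small sketch of the frame-arrival logic. It is a simplified model, not the textbook's protocol 4 code: the Peer class, its packets_acked counter, and the packet labels are assumptions, and timers and damaged frames are left out.

```python
from dataclasses import dataclass, field


@dataclass
class Frame:
    seq: int    # sequence number of this frame (0 or 1)
    ack: int    # number of the last frame received without error
    info: str   # packet carried by the frame


@dataclass
class Peer:
    name: str
    next_frame_to_send: int = 0
    frame_expected: int = 0
    packets_acked: int = 0                       # used only to label packets
    delivered: list = field(default_factory=list)

    def build_frame(self) -> Frame:
        info = f"{self.name}{self.packets_acked}"
        return Frame(self.next_frame_to_send, 1 - self.frame_expected, info)

    def on_frame_arrival(self, r: Frame) -> Frame:
        if r.seq == self.frame_expected:         # not a duplicate: deliver it
            self.delivered.append(r.info)
            self.frame_expected = 1 - self.frame_expected
        if r.ack == self.next_frame_to_send:     # our frame got through
            self.packets_acked += 1              # fetch the next packet
            self.next_frame_to_send = 1 - self.next_frame_to_send
        return self.build_frame()                # a frame is always sent back


a, b = Peer("A"), Peer("B")
frame_a0 = a.build_frame()                       # seq = 0, ack = 1
# A times out twice, so B sees the same frame three times.
replies = [b.on_frame_arrival(frame_a0) for _ in range(3)]
print(b.delivered)                               # ['A0']: duplicates are rejected
reply = a.on_frame_arrival(replies[0])
print(reply.seq, reply.ack, reply.info)          # 1 0 A1
```

B passes A's packet to its network layer only once, and every reply from B carries seq = 0 and ack = 0. When the first of these replies reaches A, A moves on to its frame 1.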

Question No. 10

Explain about the Elementary Data Link Layer Protocols?

Answer:

An Unrestricted Simplex Protocol:

As an initial example we will consider a protocol

that is as simple as it can be. Data are transmitted in one direction only.

Both the transmitting and receiving network layers are always ready. Processing

time can be ignored. Infinite buffer space is available. And best of all, the

communication channel between the data link layers never damages or loses

frames. We will nickname this thoroughly unrealistic protocol ''utopia''.

The protocol consists of two distinct procedures, a

sender and a receiver. The sender runs in the data link layer of the source

machine, and the receiver runs in the data link layer of the destination

machine. No sequence numbers or acknowledgements are used here, so MAX_SEQ is

not needed. The only event type possible is frame_arrival (i.e., the arrival of

an undamaged frame).

The sender is in an infinite while loop just pumping

data out onto the line as fast as it can. The body of the loop consists of

three actions: go fetch a packet from the (always obliging) network layer,

construct an outbound frame using the variable s, and send the frame on its

way. Only the info field of the frame is used by this protocol, because the

other fields have to do with error and flow control and there are no errors or

flow control restrictions here. The receiver is equally simple. Initially, it

waits for something to happen, the only possibility being the arrival of an

undamaged frame. Eventually, the frame arrives and the procedure wait_for_event

returns, with event set to frame_arrival (which is ignored anyway). The call to

from_physical_layer removes the newly arrived frame from the hardware buffer

and puts it in the variable r, where the receiver code can get at it. Finally,

the data portion is passed on to the network layer, and the data link layer

settles back to wait for the next frame, effectively suspending itself until the

frame arrives.
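
A minimal sketch of the two procedures follows. It is not the textbook's code: a deque stands in for the error-free channel, a dictionary with an info key stands in for the frame, and the sender loops over a finite list of packets rather than forever so that the example terminates.

```python
from collections import deque


def sender1(packets, channel) -> None:
    """Pump frames onto the line as fast as possible."""
    for packet in packets:              # fetch a packet from the network layer
        s = {"info": packet}            # only the info field is used
        channel.append(s)               # send the frame on its way


def receiver1(channel) -> list:
    """Take each arriving frame and pass its data to the network layer."""
    delivered = []
    while channel:                      # the only event is frame_arrival
        r = channel.popleft()           # plays the role of from_physical_layer
        delivered.append(r["info"])     # plays the role of to_network_layer
    return delivered


channel = deque()
sender1(["p0", "p1", "p2"], channel)
result = receiver1(channel)
print(result)                           # ['p0', 'p1', 'p2']
```

Because the channel never loses or damages frames and the receiver is never overrun, no sequence numbers, acknowledgements, or timers appear anywhere in the sketch.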

A Simplex Stop-and-Wait Protocol:

The

main problem we have to deal with here is how to prevent the sender from

flooding the receiver with data faster than the latter is able to process them.

In essence, if the receiver requires a time Δt to execute from_physical_layer

plus to_network_layer, the sender must transmit at an average rate less than

one frame per time Δt. Moreover, if we assume that no automatic buffering and

queuing are done within the receiver's hardware, the sender must never transmit

a new frame until the old one has been fetched by from_physical_layer, lest the

new one overwrite the old one. In certain restricted circumstances (e.g.,

synchronous transmission and a receiving data link layer fully dedicated to

processing the one input line), it might be possible for the sender to simply

insert a delay into protocol 1 to slow it down sufficiently to keep from

swamping the receiver. However, more usually, each data link layer will have

several lines to attend to, and the time interval between a frame arriving and

its being processed may vary considerably. If the network designers can

calculate the worst-case behavior of the receiver, they can program the sender

to transmit so slowly that even if every frame suffers the maximum delay, there

will be no overruns. The trouble with this approach is that it is too

conservative. It leads to a bandwidth utilization that is far below the

optimum, unless the best and worst cases are almost the same (i.e., the

variation in the data link layer's reaction time is small).

A more general

solution to this dilemma is to have the receiver provide feedback to the

sender. After having passed a packet to its network layer, the receiver sends a

little dummy frame back to the sender which, in effect, gives the sender

permission to transmit the next frame. After having sent a frame, the sender is

required by the protocol to bide its time until the little dummy (i.e.,

acknowledgement) frame arrives. Using feedback from the receiver to let the

sender know when it may send more data is an example of the flow control

mentioned earlier.

Protocols

in which the sender sends one frame and then waits for an acknowledgement

before proceeding are called stop-and-wait.
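
The feedback loop can be sketched as a single-threaded simulation. This is an assumption-laden illustration: two deques stand in for the forward and reverse directions of the channel, the function name stop_and_wait is hypothetical, and the sender and receiver steps are interleaved in one loop instead of running as separate processes.

```python
from collections import deque


def stop_and_wait(packets):
    """Send packets one at a time, waiting for a dummy frame after each."""
    data_channel = deque()    # sender -> receiver
    ack_channel = deque()     # receiver -> sender
    delivered = []
    max_in_flight = 0

    for packet in packets:
        # Sender: transmit exactly one frame, then stop.
        data_channel.append({"info": packet})
        max_in_flight = max(max_in_flight, len(data_channel))

        # Receiver: pass the packet up, then send the dummy frame back.
        r = data_channel.popleft()
        delivered.append(r["info"])
        ack_channel.append({})            # its contents do not matter

        # Sender: the dummy frame arrives, so the next frame may be sent.
        ack_channel.popleft()

    return delivered, max_in_flight


delivered, max_in_flight = stop_and_wait(["p0", "p1", "p2"])
print(delivered)        # ['p0', 'p1', 'p2']
print(max_in_flight)    # 1: the receiver never holds more than one unprocessed frame
```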

Question No. 11

Explain the following:

i. TCP

ii. UDP

Answer:

I. TCP - Transmission Control Protocol

TCP (Transmission Control Protocol)

was specifically designed to provide a reliable end-to-end byte stream over an

unreliable internetwork. An internetwork differs from a single network because